A/B Testing Email Campaigns: Complete Guide for Higher Conversions

Master email A/B testing in 2025 with proven strategies. Complete guide with actionable frameworks to boost open rates and conversions.

Are you sending email campaigns and wondering why some perform better than others? We've analyzed thousands of email campaigns at Groupmail, and the difference between high-performing and mediocre emails often comes down to systematic testing.

Most businesses send emails based on gut feelings rather than data. They choose subject lines they "think" will work, pick send times that seem convenient, and wonder why their open rates plateau at industry averages. According to Campaign Monitor's email marketing benchmarks, businesses that regularly A/B test their emails see 37% higher open rates and 49% better click-through rates than those who don't.

The challenge? Most email marketers either skip testing entirely or run tests that produce misleading results. We've seen businesses make costly decisions based on tests that used too-small sample sizes, changed multiple variables at once, or were concluded too quickly.

📊 Testing Impact: Companies using systematic email A/B testing report 23% higher revenue per email compared to those sending untested campaigns, according to the Litmus State of Email report.

TL;DR - Key Takeaways:

- A/B testing email campaigns can increase open rates by 37% and click-through rates by 49%

- Test one variable at a time for reliable results: subject line, send time, content, or call-to-action

- Require a minimum of 1,000 recipients per test variant for statistical significance

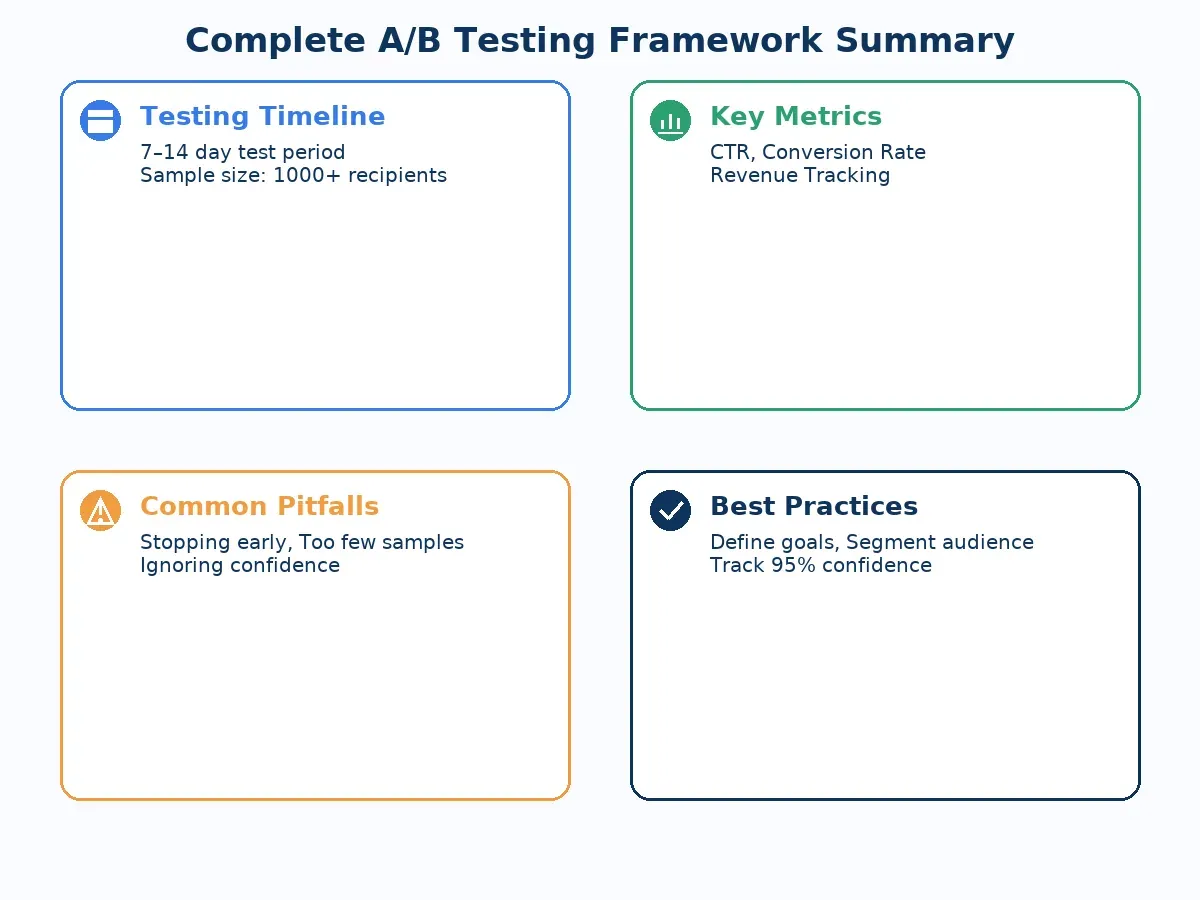

- Run tests for full business cycles (typically 7-14 days) before drawing conclusions

- Focus on metrics that impact revenue: click-through rates and conversions, not just open rates

What Is Email A/B Testing and Why It Matters

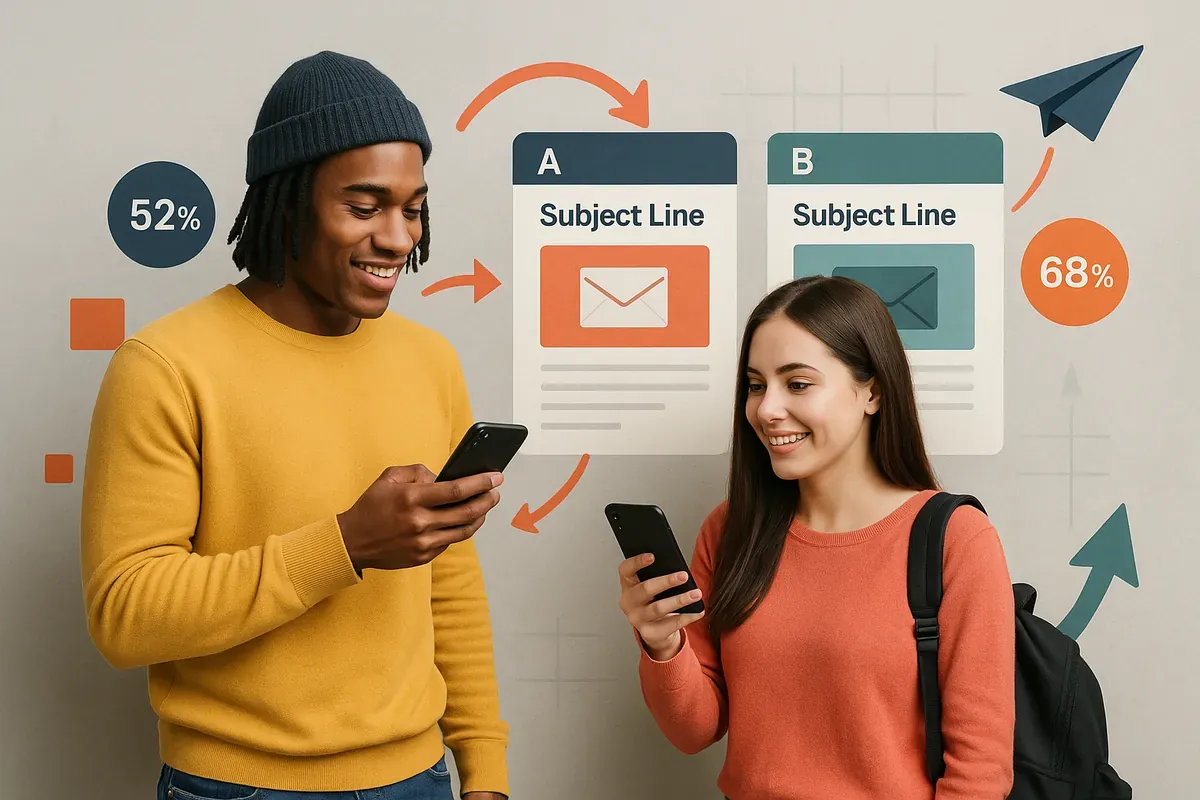

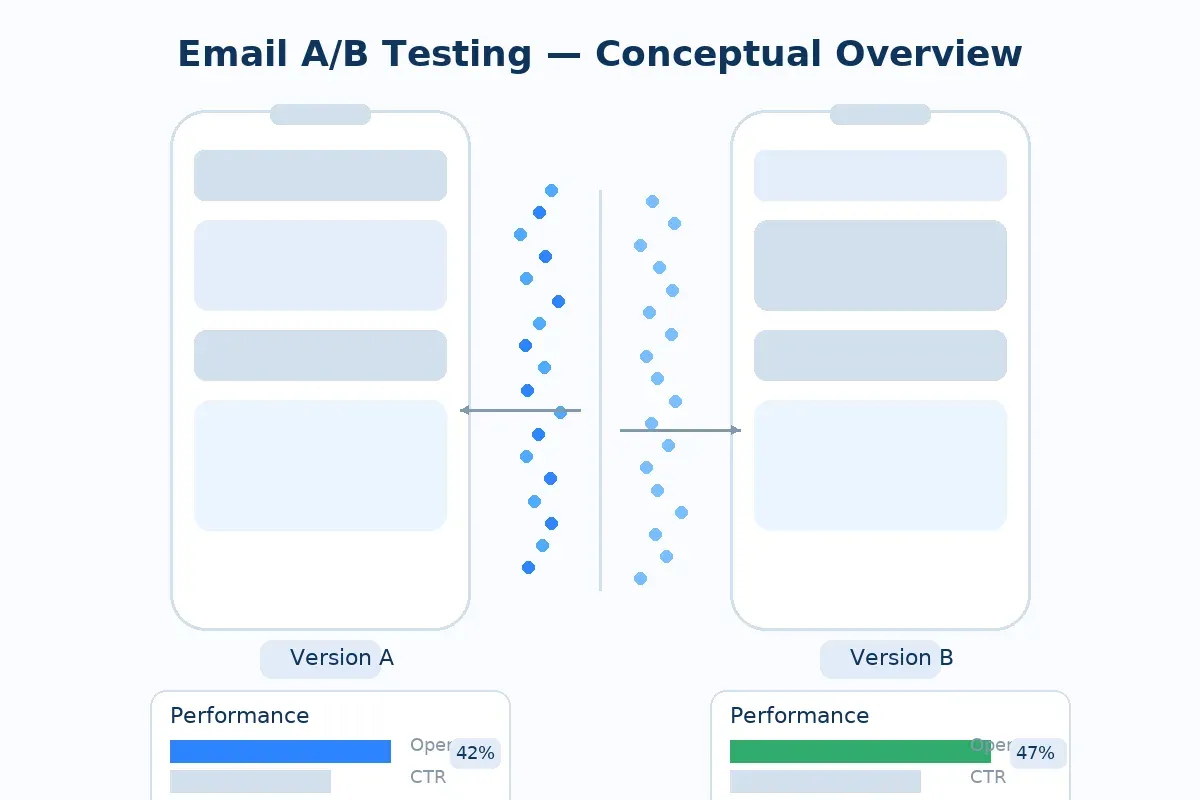

Quick Answer: Email A/B testing involves sending two variations of an email campaign to different segments of your audience to determine which version performs better. This data-driven approach helps optimize open rates, click-through rates, and conversions by testing elements like subject lines, content, and send times.

Email A/B testing, also called split testing, is the practice of comparing two versions of an email to see which one achieves better results. Version A might have "Save 20% Today" as the subject line, while Version B uses "Limited Time: Your 20% Discount Inside."

We've found this approach transforms email marketing from guesswork into science. Instead of debating whether "Buy Now" or "Shop Today" works better as a call-to-action, you test both and let your audience decide.

The methodology is straightforward: you split your email list into random segments, send each segment a different version, then measure which version generates better results based on your primary goal—whether that's opens, clicks, or conversions.

💡 Pro Tip: Start with high-impact, easy-to-test elements like subject lines before moving to complex content variations. This builds your testing confidence and delivers quick wins.

The business impact extends beyond individual campaigns. Harvard Business Review research shows that companies with strong testing cultures grow 30% faster than competitors. In email marketing, this translates to higher lifetime customer value and lower acquisition costs.

Ready to implement this? Try Groupmail's drag-and-drop builder free—unlimited sending included.

Essential Elements to A/B Test in Email Campaigns

Quick Answer: The most impactful email A/B testing elements include subject lines (affecting 35% of open decisions), send times (up to 23% performance variation), preview text, from names, call-to-action buttons, and email content structure. Start with subject lines for immediate results, then expand to other elements systematically.

Subject Lines: Your Biggest Opportunity

Subject lines represent the highest-impact testing opportunity because they directly influence open rates. We recommend testing these variations:

- Length variations: Short (under 30 characters) versus longer descriptive lines

- Emotional triggers: Urgency ("Last chance") versus curiosity ("You won't believe this")

- Personalization: Generic versus name/location personalization

- Question format: Statement versus question format

Send Time and Day Optimization

Email timing significantly impacts performance, but optimal times vary by industry and audience. Mailchimp's research on email timing reveals massive variations between B2B and B2C audiences.

Test these timing variables:

- Morning (6-10 AM) versus afternoon (2-5 PM) sends

- Weekdays versus weekend delivery

- Business hours versus evening sends

- Holiday periods versus regular weeks

From Name and Preview Text

The sender name appears alongside your subject line in most email clients, making it crucial for open rates. Test:

- Personal name ("Sarah from Groupmail") versus company name ("Groupmail Team")

- Department-specific senders ("Groupmail Support" versus "Groupmail Marketing")

- CEO/founder name versus generic business name

Preview text, the snippet shown after the subject line, offers another testing opportunity. Litmus preview text research shows optimized preview text can increase open rates by 15%.

📈 Performance Data: Personalized from names increase open rates by an average of 22%, while optimized preview text adds another 8-15% boost according to email deliverability studies.

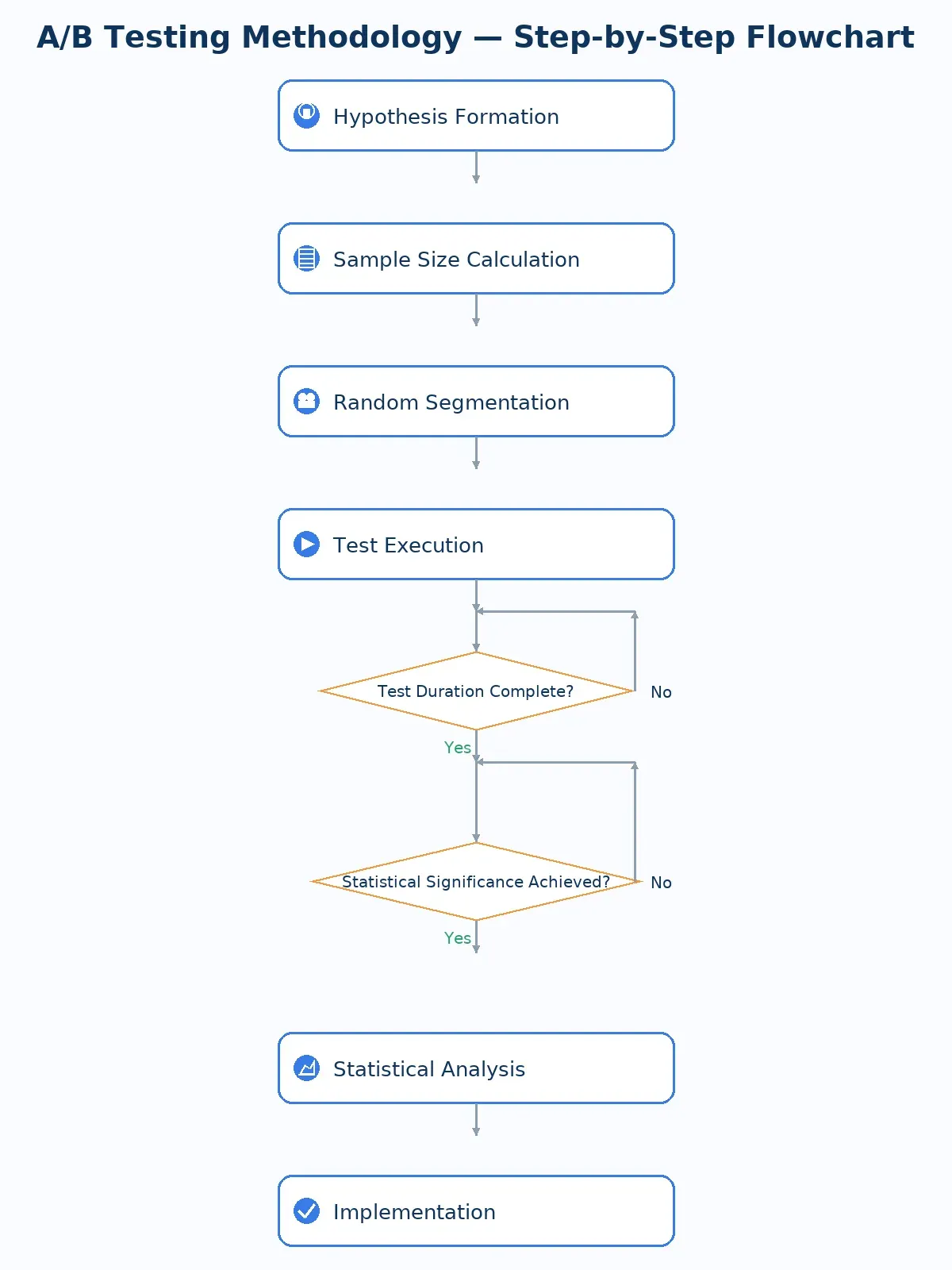

Setting Up Statistically Valid Email A/B Tests

Quick Answer: Valid email A/B testing requires a minimum of 1,000 recipients per variant, testing one variable at a time, running tests for complete business cycles (7-14 days), and achieving 95% statistical confidence before implementing changes. Poor test design leads to false conclusions and wasted opportunities.

Sample Size Requirements

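The biggest mistake we see is testing with insufficient sample sizes. As a rule of thumb, you need a minimum of 1,000 recipients per test variant to detect a 20% improvement with 95% confidence. The exact number depends on your baseline rate, so campaigns with lower open or click rates need larger samples.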

Here's our recommended sample size framework:

| List Size | Test Split | Minimum Per Variant | Recommended Test |
|---|---|---|---|
| Under 2,000 | Don't test | - | Build list first |
| 2,000-5,000 | 50/50 split | 1,000-2,500 | Subject line only |
| 5,000-20,000 | 20/20/60 split | 1,000-4,000 | Two variables max |
| 20,000+ | 10/10/80 split | 2,000+ | Multiple tests |
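To see how the 1,000-per-variant rule of thumb shifts with your baseline rate, here is a minimal Python sketch of the standard two-proportion sample size approximation. The function name, the 80% power setting, and the example baseline rates are assumptions for illustration, not figures from our data or from any specific platform.

```python
from math import ceil
from statistics import NormalDist


def sample_size_per_variant(baseline_rate, relative_lift, confidence=0.95, power=0.80):
    """Approximate recipients needed per variant (two-sided two-proportion z-test)."""
    p1 = baseline_rate
    p2 = baseline_rate * (1 + relative_lift)  # rate you hope the better variant reaches
    z_alpha = NormalDist().inv_cdf(1 - (1 - confidence) / 2)  # about 1.96 at 95%
    z_beta = NormalDist().inv_cdf(power)  # about 0.84 at 80% power (assumed)
    variance = p1 * (1 - p1) + p2 * (1 - p2)
    return ceil((z_alpha + z_beta) ** 2 * variance / (p2 - p1) ** 2)


# Hypothetical baseline open rates; the target is a 20% relative lift in each case.
for baseline in (0.15, 0.20, 0.30):
    n = sample_size_per_variant(baseline, relative_lift=0.20)
    print(f"baseline {baseline:.0%}: about {n:,} recipients per variant")
```

Under these assumptions, a 30% baseline lands close to 1,000 recipients per variant, while lower baselines call for noticeably more. Treat the output as a planning estimate and confirm it with the calculator your platform provides.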

Testing One Variable at a Time

Multivariate testing sounds appealing but creates confusion. If you simultaneously test subject line, send time, and call-to-action, you won't know which variable caused performance changes.

Our systematic approach:

- Week 1: Test subject line variations

- Week 2: Test send time with winning subject line

- Week 3: Test content variations with optimized timing

- Week 4: Test call-to-action with proven elements

Statistical Significance Standards

Don't call winners too early. Marketing research standards require 95% confidence levels for reliable conclusions.

Use these benchmarks:

- Minimum confidence level: 95%

- Minimum test duration: One complete business cycle (7-14 days)

- Sample size calculation: Use online calculators before launching tests

🔒 Data Quality: Always segment test groups randomly to avoid bias. Manual selection introduces unconscious preferences that skew results, according to behavioral economics research.
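Random assignment is simple to do in code if you ever need to build test groups outside your email platform. The sketch below shuffles a list and carves out two equal test groups plus a holdout, matching the 10/10/80 split in the table above. The function name, the fixed seed, and the example addresses are hypothetical.

```python
import random


def split_for_test(recipients, test_fraction=0.10, seed=42):
    """Randomly assign recipients to variant A, variant B, and a holdout group."""
    shuffled = list(recipients)  # copy so the original list order is untouched
    random.Random(seed).shuffle(shuffled)  # fixed seed keeps the split reproducible
    n_test = int(len(shuffled) * test_fraction)
    variant_a = shuffled[:n_test]
    variant_b = shuffled[n_test:2 * n_test]
    holdout = shuffled[2 * n_test:]  # receives the winning version later
    return variant_a, variant_b, holdout


subscribers = [f"user{i}@example.com" for i in range(20_000)]
group_a, group_b, remaining = split_for_test(subscribers, test_fraction=0.10)
print(len(group_a), len(group_b), len(remaining))
```

Shuffling before slicing is what removes selection bias: list order (signup date, alphabetical, engagement) never decides who lands in which group.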

At Groupmail, our campaign analytics dashboard automatically calculates statistical significance and recommends when to conclude tests, removing guesswork from the process.

Advanced Testing Strategies That Drive Results

Quick Answer: Advanced email A/B testing includes multivariate testing for large lists, sequential testing programs, audience segmentation tests, and revenue-focused metrics beyond open rates. These strategies require larger sample sizes but deliver deeper insights and higher ROI improvements.

Sequential Testing Programs

Rather than isolated tests, create systematic testing programs that build upon previous results. We've found this approach delivers compounding improvements over time.

Quarter 1 Focus: Foundation elements

- Subject line optimization

- Send time testing

- From name variations

Quarter 2 Focus: Content optimization

- Email length testing

- Image versus text-heavy designs

- Call-to-action placement and wording

Quarter 3 Focus: Advanced segmentation

- Demographic-based content

- Behavior-triggered variations

- Purchase history personalization

Quarter 4 Focus: Revenue optimization

- Pricing presentation tests

- Offer format variations

- Cross-sell and upsell strategies

Audience Segmentation Testing

Different audience segments often respond to different approaches. Epsilon's personalization research shows segmented emails generate 58% higher revenue than unsegmented broadcasts.

Test these audience dimensions:

- Demographics: Age groups, gender, location

- Behavior: Purchase frequency, engagement level, browse behavior

- Customer lifecycle: New subscribers, repeat customers, lapsed users

- Industry/interests: Relevant for B2B segmentation

Revenue-Focused Testing Metrics

Open rates grab attention, but revenue metrics determine business success. Focus testing on metrics that directly impact your bottom line:

Primary metrics:

- Click-through rate (CTR)

- Conversion rate

- Revenue per email

- Customer lifetime value impact

Secondary metrics:

- Unsubscribe rate

- Forward/share rate

- Time spent on landing page

- Multiple email engagement

💰 Business Impact: Revenue-focused testing typically improves email ROI by 35-60% compared to open rate optimization alone, according to email marketing effectiveness studies.

Common A/B Testing Mistakes and How to Avoid Them

Quick Answer: The most costly email A/B testing mistakes include insufficient sample sizes, testing multiple variables simultaneously, calling winners too early, and focusing only on open rates rather than revenue metrics. Avoiding these errors prevents false conclusions and maximizes testing ROI.

Mistake 1: Insufficient Sample Sizes

We regularly see businesses excited to test with 200-person email lists. Unfortunately, small samples produce unreliable results that lead to poor decisions.

The problem: A 15% open rate difference between variants might seem significant, but with small samples, this often represents random variation rather than true performance differences.

The solution: Use online sample size calculators before launching tests. For most email tests, you'll need a minimum of 1,000 recipients per variant to detect meaningful differences.
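A quick simulation makes the small-sample problem concrete. The sketch below runs repeated "A/A tests", where both groups receive the same email with the same true open rate, and counts how often the two groups still differ by 15% or more. The 20% true open rate, reading the 15% gap as relative to that rate, and the trial count are all assumptions chosen for illustration.

```python
import random


def share_of_misleading_aa_tests(n_per_variant, true_rate=0.20, gap=0.15,
                                 trials=500, seed=1):
    """Fraction of A/A tests whose open rates differ by at least gap * true_rate."""
    rng = random.Random(seed)
    threshold = gap * true_rate  # 15% of a 20% open rate = 3 percentage points
    misleading = 0
    for _ in range(trials):
        # Each recipient opens independently with the same probability in both groups.
        opens_a = sum(rng.random() < true_rate for _ in range(n_per_variant))
        opens_b = sum(rng.random() < true_rate for _ in range(n_per_variant))
        if abs(opens_a - opens_b) / n_per_variant >= threshold:
            misleading += 1
    return misleading / trials


for n in (100, 1000):
    share = share_of_misleading_aa_tests(n)
    print(f"{n} per variant: {share:.0%} of identical-email tests show a 15%+ gap")
```

Because both groups get identical emails, every large gap the simulation reports is pure noise. The share of noisy results falls sharply as the groups grow, which is the reason for the minimum sample size.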

Mistake 2: Testing Everything at Once

Multivariate testing appeals to time-pressed marketers who want fast answers. However, testing subject line, send time, and content simultaneously creates confusion about which change drove results.

Better approach: Test systematically:

- Month 1: Subject line variations

- Month 2: Send time optimization

- Month 3: Content format testing

- Month 4: Call-to-action optimization

Mistake 3: Calling Winners Too Early

Impatience kills valid testing. We've seen businesses declare winners after 24 hours, missing how performance changes over longer periods.

Weekend effect example: An email sent Thursday might show higher Friday performance, but Monday engagement could tell a different story. Email timing research confirms performance varies significantly across multi-day periods.

Solution: Run tests for complete business cycles, typically 7-14 days, before drawing conclusions.

Mistake 4: Ignoring Statistical Significance

"Version A had 22% open rate, Version B had 19% open rate, so A wins." This logic ignores whether the difference is statistically meaningful or random chance.

Use significance testing tools built into platforms like Groupmail's analytics system or standalone calculators to validate results.
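If you want to check a result by hand, a pooled two-proportion z-test is one common way to do it. The sketch below applies it to the 22% versus 19% example, assuming 1,000 recipients per variant. The function name and the counts are hypothetical, and this is not necessarily the calculation any particular platform runs internally.

```python
from math import sqrt
from statistics import NormalDist


def two_proportion_p_value(opens_a, sent_a, opens_b, sent_b):
    """Two-sided p-value for the difference between two open rates (pooled z-test)."""
    rate_a, rate_b = opens_a / sent_a, opens_b / sent_b
    pooled = (opens_a + opens_b) / (sent_a + sent_b)
    std_err = sqrt(pooled * (1 - pooled) * (1 / sent_a + 1 / sent_b))
    z = (rate_a - rate_b) / std_err
    return 2 * (1 - NormalDist().cdf(abs(z)))


# 22% vs 19% open rate with an assumed 1,000 recipients per variant.
p = two_proportion_p_value(opens_a=220, sent_a=1000, opens_b=190, sent_b=1000)
print(f"p-value: {p:.3f}, significant at 95%: {p < 0.05}")
```

With these counts the p-value stays above 0.05, so the three-point gap does not clear the 95% bar. The same rates measured on a larger list can clear it, which is why the raw percentages alone never settle the question.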

⚠️ Testing Reality Check: 70% of A/B tests show no statistically significant difference between variants. This doesn't mean testing failed. It means the change you tested made no measurable difference for that variable, so you can keep your current approach and move on to the next test.

Tools and Platforms for Email A/B Testing

Quick Answer: Effective email A/B testing requires platforms with built-in testing capabilities, statistical significance calculations, and detailed analytics. Groupmail offers comprehensive testing features with unlimited sending, while alternatives include Mailchimp, ConvertKit, and Campaign Monitor, each with different strengths and limitations.

Groupmail: Comprehensive Testing with Unlimited Sending

At Groupmail, we've built testing capabilities directly into our platform because we understand how crucial optimization is for email success. Our drag-and-drop builder includes A/B testing functionality that handles sample size calculations, statistical significance measurement, and automatic winner selection.

Key advantages:

- Unlimited email sending through flexible SMTP integration with providers like SendGrid and SMTP2GO

- Built-in statistical significance calculator prevents premature conclusions

- Automated winner deployment to remaining subscribers

- Revenue tracking integration beyond basic open/click metrics

- Exclusive SMTP2GO offer: 10,000 free emails monthly for Groupmail customers who integrate

The platform automatically segments test groups randomly and provides clear reporting on which variations achieve better results across multiple metrics.

Alternative Platforms Comparison

| Platform | A/B Testing | Sample Size Help | Statistical Significance | Pricing Model |
|---|---|---|---|---|
| Groupmail | Advanced | Automatic | Built-in | Unlimited sending |
| Mailchimp | Standard | Manual calculation | Manual | Per-contact fees |
| ConvertKit | Basic | No guidance | No | Tiered pricing |
| Campaign Monitor | Standard | Limited guidance | Basic | Per-send costs |

Testing-Specific Tools

For businesses using multiple email platforms or requiring advanced testing capabilities, standalone tools offer additional functionality:

- Optimizely for Email: Advanced multivariate testing with sophisticated statistical analysis

- VWO for Email: Heat mapping and user behavior analysis integration

- Google Analytics: UTM tracking for detailed conversion analysis

- Litmus Analytics: Email client performance and engagement tracking

📊 Platform Integration: The most successful email testing programs integrate with broader analytics systems. Google Analytics email tracking provides deeper conversion insights than email platform metrics alone.

Ready to start testing systematically? Groupmail's free account includes full A/B testing capabilities with no sending limits.

Measuring Success: Key Metrics Beyond Open Rates

Quick Answer: Successful email A/B testing focuses on revenue-driving metrics including click-through rates, conversion rates, revenue per email, and customer lifetime value impact. Open rates, while important, don't correlate directly with business results and can mislead optimization efforts.

Revenue-Focused Metrics That Matter

Open rates grab attention, but smart email marketers optimize for metrics that directly impact business growth. DMA Email Marketing ROI research shows the strongest performing campaigns prioritize conversion metrics over engagement vanity metrics.

Primary success metrics:

- Click-through rate (CTR): Percentage of recipients who click email links

- Conversion rate: Percentage completing desired actions (purchase, signup, download)

- Revenue per email: Total revenue generated divided by emails sent

- Customer lifetime value (CLV) impact: Long-term value of email-acquired customers

Secondary engagement metrics:

- Forward/share rate: Viral potential and content quality indicator

- Time spent on landing page: Content relevance and user interest

- Multi-email engagement: How testing affects subsequent campaign performance

- Unsubscribe rate: Audience satisfaction and content-market fit
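The primary metrics above reduce to simple ratios over emails sent, so they are easy to compute from an export of your campaign results. The sketch below is one way to do it. The class name, field names, and all figures are hypothetical, and conversion rate is calculated per recipient to match the definitions in this guide.

```python
from dataclasses import dataclass


@dataclass
class VariantResult:
    sent: int
    clicks: int
    conversions: int
    revenue: float

    @property
    def click_through_rate(self) -> float:
        return self.clicks / self.sent

    @property
    def conversion_rate(self) -> float:
        return self.conversions / self.sent

    @property
    def revenue_per_email(self) -> float:
        return self.revenue / self.sent


# Made-up results for two variants of the same campaign.
variant_a = VariantResult(sent=1000, clicks=80, conversions=12, revenue=600.0)
variant_b = VariantResult(sent=1000, clicks=30, conversions=5, revenue=250.0)

for name, result in (("A", variant_a), ("B", variant_b)):
    print(f"Variant {name}: CTR {result.click_through_rate:.1%}, "
          f"conversion {result.conversion_rate:.1%}, "
          f"revenue per email ${result.revenue_per_email:.2f}")
```

Keeping every metric on the same denominator (emails sent) makes variants directly comparable, even when their open rates differ.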

Tracking Implementation Strategy

Most businesses struggle with proper conversion tracking, limiting their ability to optimize for revenue rather than engagement. Here's our systematic approach:

1. UTM Parameter Setup

Use consistent UTM codes for each email variant:

- utm_source=email

- utm_medium=ab-test

- utm_campaign=campaign-name

- utm_content=variant-a or variant-b
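Tagging links by hand invites typos, so it can help to generate them. Here is a minimal sketch using Python's standard `urllib.parse` module that appends the UTM convention from this step to any landing page URL. The function name, the example URL, and the campaign name are hypothetical.

```python
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit


def add_utm(url, campaign, variant, source="email", medium="ab-test"):
    """Return the URL with UTM parameters added, keeping any existing query string."""
    parts = urlsplit(url)
    query = dict(parse_qsl(parts.query))  # preserve parameters already on the link
    query.update({
        "utm_source": source,
        "utm_medium": medium,
        "utm_campaign": campaign,
        "utm_content": variant,  # the only value that differs between variants
    })
    return urlunsplit(parts._replace(query=urlencode(query)))


link_a = add_utm("https://example.com/sale?ref=nav", "spring-sale", "variant-a")
link_b = add_utm("https://example.com/sale?ref=nav", "spring-sale", "variant-b")
print(link_a)
print(link_b)
```

Only `utm_content` changes between the two links, so your analytics reports can separate the variants while still rolling them up under a single campaign.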

2. Goal Configuration

Set up specific goals in Google Analytics or your analytics platform:

- Macro conversions: Purchases, subscriptions, high-value actions

- Micro conversions: PDF downloads, video views, content engagement

- Revenue goals: Actual dollar amounts rather than just conversion counts

3. Attribution Modeling

Email often initiates customer journeys that convert through other channels. Multi-touch attribution research shows email influences 21% more conversions than last-click attribution reveals.

💰 Revenue Reality: Emails with 15% open rates but 8% click-through rates often generate more revenue than emails with 25% open rates but 3% click-through rates. Always prioritize metrics closest to your business goals.

Long-term Performance Analysis

A/B testing insights compound over time. Track these longer-term metrics:

Quarterly analysis:

- Revenue per subscriber trends

- List quality improvements

- Customer acquisition cost changes

- Overall email program ROI growth

Annual assessment:

- Subscriber lifetime value improvements

- Testing program efficiency

- Organizational learning and capability building

- Competitive performance benchmarks

The most successful email programs at companies we work with see 40-60% improvement in email ROI within 12 months of implementing systematic A/B testing programs.

Key Terms

A/B Testing: Comparing two email versions to determine which performs better based on specific metrics

Click-Through Rate (CTR): Percentage of email recipients who click on links within the email campaign

Conversion Rate: Percentage of email recipients who complete a desired action after clicking through

Statistical Significance: Mathematical confidence level (typically 95%) that test results represent true differences, not random variation

Sample Size: Number of email recipients required for reliable test results, typically minimum 1,000 per variant

Multivariate Testing: Testing multiple email elements simultaneously, requiring larger sample sizes than A/B tests

Preview Text: Text snippet displayed alongside subject line in email clients, affecting open rates

UTM Parameters: Tracking codes added to email links for detailed analytics and conversion attribution

Open Rate: Percentage of recipients who open an email, though less reliable due to privacy changes

Revenue Per Email (RPE): Total revenue generated divided by emails sent, key performance indicator for email ROI

Frequently Asked Questions

How long should I run an email A/B test before determining a winner?

Run tests for at least one complete business cycle, typically 7-14 days. This accounts for day-of-week variations and ensures you're measuring true performance differences rather than timing coincidences. Shorter tests often produce misleading results that lead to poor optimization decisions.

What's the minimum list size needed for reliable email A/B testing?

You need a minimum of 1,000 recipients per test variant to detect a 20% performance improvement with 95% confidence. This means 2,000 total subscribers for basic A/B tests. Smaller lists should focus on list building before testing, as results from smaller samples often mislead rather than guide optimization efforts.

Should I test multiple email elements simultaneously or one at a time?

Test one variable at a time for clear, actionable results. Testing subject line, send time, and content simultaneously makes it impossible to determine which change drove performance improvements. Sequential testing takes longer but provides reliable insights you can build upon systematically.

Which email metrics should I focus on when A/B testing campaigns?

Focus on metrics closest to your business goals: click-through rates, conversion rates, and revenue per email. Open rates, while popular, don't correlate directly with business results and can mislead optimization efforts. Revenue-focused testing typically improves email ROI by 35-60% compared to engagement-only optimization.

How do I know if my A/B test results are statistically significant?

Use built-in significance calculators in your email platform or online statistical significance tools. Look for 95% confidence levels before declaring winners. Many email platforms, including Groupmail, automatically calculate significance and recommend when to conclude tests, removing guesswork from the process.

Can I A/B test with small business email lists under 2,000 subscribers?

Small lists should focus on growth before testing, as results from fewer than 1,000 recipients per variant often represent random variation rather than true performance differences. Instead, study successful email examples in your industry and implement proven best practices while building your subscriber base.

What's the biggest mistake businesses make with email A/B testing?

The most costly mistake is calling winners too early with insufficient data. Many businesses declare victory after 24-48 hours with small sample sizes, leading to false conclusions and poor optimization decisions. Proper testing requires patience, adequate sample sizes, and statistical validation.

Does email A/B testing work differently for B2B companies than for B2C?

B2B email testing often requires longer test periods due to longer sales cycles and smaller target audiences. B2B tests should focus on content value and professional tone variations, while B2C tests can emphasize emotional triggers and urgency. However, the fundamental testing methodology remains the same across business models.

Email A/B testing transforms marketing from guesswork into a systematic, data-driven process that consistently improves results. The businesses we work with that implement regular testing see average improvements of 37% in open rates and 49% in click-through rates within their first year.

Success requires patience, proper methodology, and focus on metrics that actually drive revenue. Start with high-impact elements like subject lines, ensure adequate sample sizes, and always prioritize statistical significance over quick wins.

The compounding effect of systematic testing creates sustainable competitive advantages. Each optimization builds upon previous results, creating email programs that consistently outperform industry benchmarks and drive measurable business growth.

Start creating professional email campaigns with Groupmail's free account—no credit card required. Access unlimited sending and our live AI subject line generator today.